Introduction

Organizations rely on robust data processing to derive actionable insights and stay competitive. At the core of this process is the ETL (Extract, Transform, Load) pipeline, which integrates data from diverse sources into a unified, analytics-ready format. A well-designed ETL pipeline simplifies data management, improves data quality, and shortens time-to-insight. This article covers best practices for designing ETL pipelines, with an emphasis on performance and scalability in data science, big data, and data management work.

Understanding ETL Pipelines

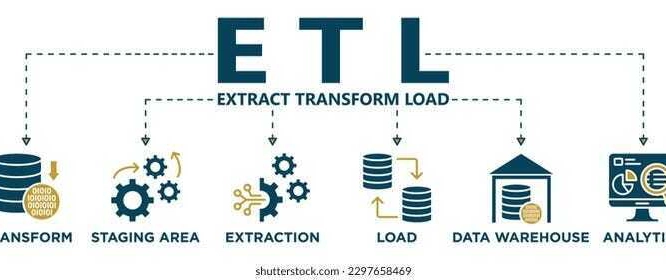

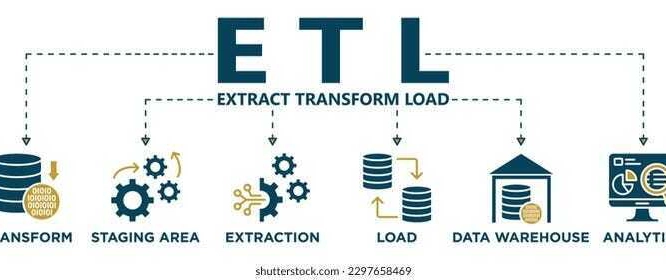

ETL pipelines are foundational to data integration, enabling organizations to aggregate, transform, and store data from varied sources for analytics, reporting, and business intelligence. The process involves three stages:

- Extraction: Data is retrieved from sources such as databases, APIs, files, cloud storage, or streaming platforms. This raw data is extracted while preserving its original structure and integrity.

- Transformation: The extracted data is processed to ensure consistency, quality, and compatibility with the target system. Transformations include cleaning, filtering, aggregating, and enriching data to align with business needs.

- Loading: Transformed data is transferred to a destination, such as a data warehouse, data lake, or database, where it is ready for downstream applications like analytics or machine learning.

By harmonizing disparate data sources, ETL pipelines support data-driven decision-making, making them indispensable in data science and big data workflows. Designing efficient pipelines requires a deep understanding of their components and adherence to best practices to ensure reliability, scalability, and performance.
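The three stages can be sketched in a few lines of Python. This is only an illustrative outline using the standard library: the CSV content, the `orders` table, and the function names are assumptions made for the example, and an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import sqlite3

# Hypothetical raw input standing in for a source system export.
RAW_CSV = """order_id,customer,amount
1,alice,10.50
2,BOB,
3,carol,7.25
"""

def extract(text):
    """Extraction: read rows as-is, without altering the source structure."""
    return list(csv.DictReader(io.StringIO(text)))

def transform(rows):
    """Transformation: drop rows with no amount, normalize names, cast types."""
    cleaned = []
    for row in rows:
        if not row["amount"]:
            continue  # filter out incomplete records
        cleaned.append(
            (int(row["order_id"]), row["customer"].strip().lower(), float(row["amount"]))
        )
    return cleaned

def load(records, conn):
    """Loading: write the transformed records to the target table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
load(transform(extract(RAW_CSV)), conn)
loaded = conn.execute(
    "SELECT order_id, customer, amount FROM orders ORDER BY order_id"
).fetchall()
print(loaded)
```

Keeping each stage in its own function makes it easier to test, monitor, and replace stages independently as the pipeline grows.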

Key Components of an ETL Pipeline

An effective ETL pipeline comprises several interconnected components that facilitate seamless data flow:

- Data Sources: These include relational databases, NoSQL systems, APIs, flat files, or real-time streams. Identifying relevant sources ensures comprehensive data capture for analytics.

- Extraction Mechanism: This handles data retrieval using methods like batch processing, real-time streaming, or change data capture (CDC). Efficient extraction minimizes latency and resource usage.

- Transformation Engine: The engine processes raw data through cleaning, normalization, aggregation, or enrichment, ensuring it meets the destination’s requirements and maintains high quality.

- Data Loading: Bulk, incremental, or streaming loads deliver transformed data to targets such as data warehouses or data lakes, with the method chosen to fit the use case.

- Orchestration and Workflow Management: This coordinates pipeline tasks, managing execution order, scheduling, error handling, and monitoring to ensure smooth operation.

Optimizing these components is critical for building robust ETL pipelines that support big data initiatives and deliver reliable insights for data management.

Best Practices for Designing Efficient ETL Pipelines

To maximize efficiency, scalability, and reliability, organizations should adopt the following best practices for ETL pipeline design:

1. Define Clear Objectives and Requirements

Start by outlining the pipeline’s goals, including data sources, transformation logic, and target destinations. Align these with business objectives, such as real-time analytics or compliance reporting. Clear requirements guide the pipeline’s architecture, ensuring it meets data science and business intelligence needs.

2. Optimize Data Extraction

Choose extraction methods based on data volume and latency requirements. For example:

- Incremental Extraction: Retrieve only new or updated data using timestamps or CDC to reduce processing overhead.

- Partitioned Extraction: Break large datasets into smaller chunks to improve efficiency.

- Source-Specific Optimization: Use native connectors or APIs to minimize latency and ensure compatibility with big data sources.

These techniques enhance data management by reducing resource consumption and speeding up extraction.
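As one possible illustration of timestamp-based incremental extraction, the sketch below keeps a watermark (the latest `updated_at` value already seen) and asks the source only for newer rows. The `source_events` table, its columns, and the `extract_incremental` function are hypothetical, and SQLite stands in for the source database.

```python
import sqlite3

def extract_incremental(conn, last_watermark):
    """Return rows updated after last_watermark, plus the new watermark."""
    rows = conn.execute(
        "SELECT id, payload, updated_at FROM source_events "
        "WHERE updated_at > ? ORDER BY updated_at",
        (last_watermark,),
    ).fetchall()
    # Advance the watermark only if something new was found.
    new_watermark = rows[-1][2] if rows else last_watermark
    return rows, new_watermark

# Hypothetical source data; ISO-8601 strings sort chronologically.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE source_events (id INTEGER, payload TEXT, updated_at TEXT)")
source.executemany(
    "INSERT INTO source_events VALUES (?, ?, ?)",
    [
        (1, "a", "2024-01-01T00:00:00"),
        (2, "b", "2024-01-02T00:00:00"),
        (3, "c", "2024-01-03T00:00:00"),
    ],
)

first_batch, watermark = extract_incremental(source, "2024-01-01T00:00:00")
second_batch, watermark_after = extract_incremental(source, watermark)
```

A strict `>` comparison can miss rows that share the watermark's timestamp but are written later, so real pipelines often add a small overlap window or rely on CDC instead.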

3. Streamline Data Transformation

Transformation is often the most resource-intensive stage. Optimize it by:

- Modular Processing: Break transformations into smaller, modular steps to improve scalability.

- Parallel Processing: Distribute tasks across multiple nodes or threads to leverage computational resources.

- Data Partitioning: Split large datasets into manageable segments for faster processing.

- Reusable Transformation Logic: Use templates or libraries to standardize common transformations, reducing development time.

These strategies ensure efficient handling of big data, improving pipeline performance and data quality.
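The partitioning, parallelism, and reuse ideas above can be combined in a small sketch. It assumes an in-memory list of records and uses a thread pool from the standard library for simplicity; for CPU-heavy transformations a process pool or a distributed engine would be the more usual choice. All function names and the record layout are illustrative.

```python
from concurrent.futures import ThreadPoolExecutor

def partition(records, size):
    """Split a list into consecutive chunks of at most `size` items."""
    return [records[i:i + size] for i in range(0, len(records), size)]

def normalize(record):
    """Reusable transformation: trim and lowercase the name."""
    return {"name": record["name"].strip().lower(), "amount": record["amount"]}

def transform_partition(chunk):
    """Apply the shared transformation to every record in one partition."""
    return [normalize(r) for r in chunk]

def transform_parallel(records, size=2, workers=4):
    """Transform partitions concurrently and return the rows in input order."""
    chunks = partition(records, size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in the same order as the input partitions.
        results = pool.map(transform_partition, chunks)
        return [row for chunk in results for row in chunk]

records = [
    {"name": " Alice ", "amount": 10.5},
    {"name": "BOB", "amount": 3.0},
    {"name": "Carol", "amount": 7.25},
]
transformed = transform_parallel(records)
```

Because `normalize` is a plain function with no shared state, the same logic can be reused across pipelines and run safely on any partition.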

4. Prioritize Data Quality

High-quality data is essential for reliable insights. Implement:

- Data Profiling: Analyze data to identify anomalies or inconsistencies before transformation.

- Validation Checks: Validate data at each stage to catch errors early.

- Cleansing and Deduplication: Remove duplicates and correct errors to ensure accuracy.

- Metadata Management: Track data lineage and transformations to support compliance and auditing.

These practices enhance data management, ensuring trustworthy outputs for data science applications.
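A minimal sketch of validation checks and deduplication might look like the following. The rules (an `@` in the email, an age between 0 and 130) and the field names are assumptions chosen for the example, not a recommended rule set.

```python
def validate(record):
    """Return a list of problems found in one record (empty list means valid)."""
    problems = []
    if not record.get("email") or "@" not in record["email"]:
        problems.append("invalid email")
    if not isinstance(record.get("age"), int) or not 0 <= record["age"] <= 130:
        problems.append("age out of range")
    return problems

def clean(records):
    """Split records into valid, deduplicated rows and rejected rows."""
    seen, valid, rejected = set(), [], []
    for record in records:
        problems = validate(record)
        if problems:
            # Keep the reasons so rejected rows can be audited later.
            rejected.append((record, problems))
            continue
        key = record["email"].lower()  # deduplicate case-insensitively
        if key in seen:
            continue
        seen.add(key)
        valid.append(record)
    return valid, rejected

records = [
    {"email": "a@example.com", "age": 30},
    {"email": "A@example.com", "age": 30},   # duplicate of the first row
    {"email": "no-at-sign", "age": 25},      # fails the email check
    {"email": "b@example.com", "age": 200},  # fails the age check
]
valid, rejected = clean(records)
```

Routing rejected rows to a separate list, rather than silently dropping them, gives the pipeline a record of what was excluded and why.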

5. Optimize Data Loading

Select loading methods that align with the target system’s capabilities:

- Bulk Loading: Ideal for large, infrequent data transfers to data warehouses.

- Incremental Loading: Suitable for frequent updates to minimize latency.

- Streaming: Enables real-time data loading for dynamic analytics.

Optimizing loading reduces bottlenecks and ensures timely data availability for analytics.
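To make the difference between bulk and incremental loading concrete, here is a small sketch against an in-memory SQLite table. The `customers` table and both function names are hypothetical, and SQLite's `INSERT OR REPLACE` is used as a simple stand-in for whatever upsert or merge statement the real target supports.

```python
import sqlite3

def bulk_load(conn, rows):
    """Bulk loading: insert many rows in a single transaction."""
    with conn:  # commits on success, rolls back on error
        conn.executemany("INSERT INTO customers (id, name) VALUES (?, ?)", rows)

def incremental_load(conn, rows):
    """Incremental loading: add new rows and overwrite changed ones by key."""
    with conn:
        conn.executemany(
            "INSERT OR REPLACE INTO customers (id, name) VALUES (?, ?)", rows
        )

target = sqlite3.connect(":memory:")  # stand-in for the target system
target.execute("CREATE TABLE customers (id INTEGER PRIMARY KEY, name TEXT)")

bulk_load(target, [(1, "alice"), (2, "bob")])               # initial load
incremental_load(target, [(2, "robert"), (3, "carol")])     # later delta
final_rows = target.execute("SELECT id, name FROM customers ORDER BY id").fetchall()
```

Keying the incremental load on the primary key makes it safe to re-run the same delta, since repeated rows overwrite themselves instead of duplicating.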

6. Implement Robust Monitoring and Logging

Continuous monitoring is critical for identifying performance issues. Use tools to track:

- Resource Utilization: Monitor CPU, memory, and storage usage.

- Data Throughput: Measure data processing rates to identify slowdowns.

- Latency Metrics: Track delays to ensure timely data delivery.

Logging errors and performance metrics enables proactive tuning, enhancing pipeline reliability and big data processing efficiency.
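One lightweight way to capture throughput and latency is to wrap each stage in a decorator that times it and logs the result. The sketch below uses only the standard library; the `monitored` decorator and the in-memory `metrics` list are illustrative stand-ins for a real metrics backend, and resource utilization (CPU, memory) is not covered here.

```python
import logging
import time
from functools import wraps

logging.basicConfig(level=logging.INFO, format="%(asctime)s %(levelname)s %(message)s")
log = logging.getLogger("etl")

metrics = []  # in-memory stand-in for a metrics backend

def monitored(stage):
    """Decorator that records row count and elapsed time for a stage."""
    def decorator(func):
        @wraps(func)
        def wrapper(rows):
            start = time.perf_counter()
            try:
                result = func(rows)
            except Exception:
                # Log the failure with a traceback, then let it propagate.
                log.exception("stage=%s failed", stage)
                raise
            elapsed = time.perf_counter() - start
            rate = len(result) / elapsed if elapsed > 0 else float("inf")
            metrics.append({"stage": stage, "rows": len(result), "seconds": elapsed})
            log.info(
                "stage=%s rows=%d seconds=%.4f rows_per_sec=%.0f",
                stage, len(result), elapsed, rate,
            )
            return result
        return wrapper
    return decorator

@monitored("transform")
def double(rows):
    """Toy transformation used to exercise the decorator."""
    return [r * 2 for r in rows]

output = double([1, 2, 3])
```

Emitting the same key=value fields for every stage keeps the logs easy to parse and compare across runs.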

7. Automate Workflow Management

Automation streamlines pipeline operations. Use orchestration tools like Apache Airflow or AWS Step Functions to:

- Schedule tasks for consistent execution.

- Handle errors with automated retries or alerts.

- Manage dependencies to ensure proper task sequencing.

Automation reduces manual effort, improving data management and operational efficiency.
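Dependency management is the core idea behind these orchestration tools. The sketch below is not Airflow or Step Functions code; it uses Python's standard `graphlib` module (Python 3.9+) to show how declaring dependencies lets a runner derive a valid execution order. The task names and the `run_pipeline` function are assumptions for the example.

```python
from graphlib import TopologicalSorter

def run_pipeline(tasks, dependencies):
    """Run tasks in dependency order; return names in the order executed."""
    executed = []
    for name in TopologicalSorter(dependencies).static_order():
        tasks[name]()
        executed.append(name)
    return executed

log_lines = []
tasks = {
    "extract": lambda: log_lines.append("extracted"),
    "transform": lambda: log_lines.append("transformed"),
    "load": lambda: log_lines.append("loaded"),
}
# Each key maps to the set of tasks that must finish before it starts.
dependencies = {
    "extract": set(),
    "transform": {"extract"},
    "load": {"transform"},
}
order = run_pipeline(tasks, dependencies)
```

A dedicated orchestrator adds scheduling, retries, and alerting on top of this ordering, which is why it is usually preferred over hand-rolled runners in production.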

8. Design for Scalability and Fault Tolerance

Build pipelines to handle growing data volumes and failures:

- Scalability: Use cloud-native or distributed frameworks (e.g., Apache Spark) to scale with big data demands.

- Fault Tolerance: Implement redundancy, checkpointing, and failover mechanisms so processing can resume after a failure without starting over.

- Retry Logic: Automatically retry failed tasks to minimize disruptions.

These measures ensure pipelines remain robust under varying workloads, supporting data science scalability.
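Retry logic and checkpointing can be sketched together as follows. The example assumes batches are processed in order and keeps the checkpoint in a plain dictionary; a real pipeline would persist it to durable storage. The function names, the backoff values, and the simulated failure are all illustrative.

```python
import time

def retry(func, attempts=3, base_delay=0.01):
    """Call func, retrying on any exception with exponential backoff."""
    for attempt in range(1, attempts + 1):
        try:
            return func()
        except Exception:
            if attempt == attempts:
                raise  # give up after the final attempt
            time.sleep(base_delay * 2 ** (attempt - 1))

def process_with_checkpoint(batches, handler, checkpoint):
    """Process batches in order, skipping those already recorded as done."""
    for index, batch in enumerate(batches):
        if index < checkpoint["next_batch"]:
            continue  # finished in an earlier run
        retry(lambda: handler(batch))
        checkpoint["next_batch"] = index + 1  # record progress after success

calls = {"count": 0}
processed = []

def flaky_handler(batch):
    """Hypothetical handler that fails once to simulate a transient error."""
    calls["count"] += 1
    if calls["count"] == 2:
        raise RuntimeError("simulated transient failure")
    processed.append(sum(batch))

checkpoint = {"next_batch": 0}
process_with_checkpoint([[1, 2], [3, 4], [5]], flaky_handler, checkpoint)
```

Retries only help if the handler is safe to run twice, so this pattern pairs naturally with idempotent loads.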

9. Secure Data Pipelines

Protect sensitive data by:

- Encrypting data in transit and at rest.

- Implementing access controls and authentication.

- Ensuring compliance with regulations like GDPR or CCPA.

Security is a cornerstone of data management, safeguarding data integrity and trust.

10. Test and Validate Pipelines

Regularly test pipelines to ensure reliability:

- Unit Testing: Validate individual components like transformations or loading scripts.

- Integration Testing: Test the entire pipeline for end-to-end functionality.

- Stress Testing: Simulate high data volumes to assess scalability.

Testing ensures pipelines meet data science and business intelligence requirements.
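As a small example of unit testing a transformation, the sketch below tests a hypothetical `normalize_country` function with Python's built-in `unittest` module. The function, its mapping, and the `UNKNOWN` fallback are assumptions made for illustration.

```python
import unittest

def normalize_country(value):
    """Hypothetical transformation: map free-text country names to codes."""
    mapping = {"united states": "US", "usa": "US", "germany": "DE"}
    return mapping.get(value.strip().lower(), "UNKNOWN")

class NormalizeCountryTest(unittest.TestCase):
    def test_known_values_are_mapped(self):
        self.assertEqual(normalize_country(" USA "), "US")
        self.assertEqual(normalize_country("Germany"), "DE")

    def test_unknown_values_are_flagged(self):
        self.assertEqual(normalize_country("Atlantis"), "UNKNOWN")

# Run the tests programmatically so the example works as a plain script.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(NormalizeCountryTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

Tests like these run in milliseconds, so they can gate every change to transformation logic before slower integration and stress tests run.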

Challenges and Considerations

Designing ETL pipelines involves navigating challenges like:

- Data Volume and Velocity: Big data environments require scalable architectures to handle increasing data loads.

- Heterogeneous Sources: Integrating diverse data formats demands flexible extraction and transformation logic.

- Latency Requirements: Real-time analytics may necessitate streaming pipelines over batch processing.

Addressing these challenges requires balancing performance, cost, and complexity while aligning with data management goals.

Tools and Technologies for ETL Pipelines

Modern ETL pipelines leverage tools like:

- Apache Spark: For distributed processing of big data.

- Apache Airflow: For workflow orchestration and scheduling.

- Talend or Informatica: For enterprise-grade ETL solutions.

- Cloud Platforms: AWS Glue, Google Cloud Dataflow, or Azure Data Factory for scalable, cloud-native pipelines.

Selecting the right tools enhances efficiency and supports data science initiatives.

Conclusion

An efficient ETL pipeline is vital for unlocking the full potential of data in today’s data-driven landscape. By defining clear objectives, optimizing extraction and transformation, prioritizing data quality, and embracing automation and scalability, organizations can build robust pipelines that deliver timely, accurate insights. Continuous monitoring, security, and testing further enhance reliability, ensuring pipelines meet the demands of data science, big data, and data management. With these best practices, businesses can streamline data integration, accelerate decision-making, and maintain a competitive edge.